Originally posted on Stanford Social Innovation Review by Stefaan G. Verhulst.

Proprietary data can help improve and save lives, but fully harnessing its potential will require a cultural transformation in the way companies, governments, and other organizations treat and act on data.

In April 2015, Nepal was hit by the Gorkha earthquake, the worst to strike the country in more than 80 years. Hundreds of thousands of people were left homeless and entire villages were flattened. The earthquake also triggered massive avalanches on Mount Everest and ultimately killed nearly 9,000 people across the country.

Yet for all the destruction, the toll could have been far greater. Without minimizing the scale of the disaster that struck Nepal that day, it is fair to say that the responsible use of data helped avert a worse calamity, and it may offer lessons for disasters elsewhere in the world.

Following the earthquake, government and civil society organizations rushed in to address the humanitarian crisis. Notably, so did the private sector. Nepal’s largest mobile operator, Ncell, for example, decided to share its mobile data—in an aggregated, de-identified way—with the nonprofit Swedish organization Flowminder. Flowminder then used this data to map population movements around the country; these real-time maps allowed the government and humanitarian organizations to better target aid and relief to affected communities, thus maximizing the impact of their efforts.
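To make "aggregated, de-identified" more concrete, here is a minimal sketch of one way individual location records could be reduced to region-to-region movement counts before they are shared. It is not Flowminder's methodology, which is not described here. The record layout `(subscriber_id, day_index, region)`, the function name `movement_matrix`, and the `min_count` suppression threshold are all hypothetical, and the type hints assume Python 3.9 or later.

```python
from collections import Counter
from typing import Iterable

# Hypothetical record layout: (subscriber_id, day_index, region).
# Real call-detail records carry many more fields; only these are assumed.
Record = tuple[str, int, str]


def movement_matrix(
    records: Iterable[Record], min_count: int = 10
) -> dict[tuple[str, str], int]:
    """Reduce individual records to origin->destination movement counts.

    Only aggregate counts leave this function. Any origin/destination pair
    observed fewer than `min_count` times is suppressed so that small
    groups of people cannot be singled out in the shared output.
    """
    # One region per subscriber per day; if a subscriber has several
    # records on the same day, the one appearing last in the input wins.
    daily: dict[str, dict[int, str]] = {}
    for subscriber, day, region in records:
        daily.setdefault(subscriber, {})[day] = region

    # Count moves between different regions on consecutive days.
    counts: Counter = Counter()
    for days in daily.values():
        ordered = sorted(days.items())
        for (d1, r1), (d2, r2) in zip(ordered, ordered[1:]):
            if d2 == d1 + 1 and r1 != r2:
                counts[(r1, r2)] += 1

    # Small-cell suppression: drop flows too small to publish safely.
    return {pair: n for pair, n in counts.items() if n >= min_count}


# Usage with made-up records: the single Gorkha->Kathmandu move is
# suppressed at min_count=2, leaving only the two Kathmandu->Chitwan moves.
sample = [
    ("a", 0, "Kathmandu"), ("a", 1, "Chitwan"),
    ("b", 0, "Kathmandu"), ("b", 1, "Chitwan"),
    ("c", 0, "Gorkha"), ("c", 1, "Kathmandu"),
]
print(movement_matrix(sample, min_count=2))  # → {('Kathmandu', 'Chitwan'): 2}
```

The suppression threshold is the design choice that matters most here: raising it protects small communities better but hides small, possibly important flows, which is exactly the kind of trade-off the "protect" pillar below asks data holders to weigh.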

The initiative has been widely lauded as a model for cross-sector collaboration. But perhaps most striking is the way it used data: in particular, how it repurposed data originally collected for private purposes for public ends. This use of corporate data for wider social impact reflects the emerging concept of “data responsibility.” And while its contours are still being defined, it is increasingly apparent that data responsibility can play a transformative role in serving public ends, from the way we respond to natural and other disasters to our work toward the Sustainable Development Goals adopted by the United Nations in 2015.

Defining Data Responsibility

We live, as it is now common to point out, in an era of big data. Apps, social media, and e-commerce platforms, along with sensor-rich devices such as mobile phones, wearables, commercial cameras, and even cars, generate zettabytes of data about the environment and about us.

Yet much of the most valuable data resides with the private sector, for example in the form of click histories, online purchases, sensor data, and call data records. This limits its potential to benefit the public and to become a social asset. Consider how data held by businesses could improve policy interventions such as urban planning, strengthen resilience in a time of climate change, or help design public services that increase food security.

Data responsibility suggests steps that organizations can take to break down these private barriers and foster so-called data collaboratives: partnerships through which they share proprietary data for the public good. For the private sector, data responsibility represents a new type of corporate social responsibility for the 21st century.

While Nepal’s Ncell belongs to a relatively small group of corporations that have shared their data, there are encouraging signs that the practice is gaining momentum. In Jakarta, for example, Twitter shared some of its data with researchers, who used it to gather and display real-time information about massive floods. The resulting website, PetaJakarta.org, enabled better flood assessment and management. And in Senegal, the Data for Development project has brought together leading cellular operators to share anonymized data and identify patterns that could help improve health, agriculture, urban planning, energy, and national statistics.

Examples like these suggest that proprietary data can help improve and save lives. But to fully harness the potential of data, data holders need to fulfill at least three conditions. I call these “the three pillars of data responsibility.”

The Three Pillars of Data Responsibility

1. Share. This is perhaps the most obvious pillar: Data holders have a duty to share private data when there is a clear case that doing so serves the public good. There is now ample evidence that data, with appropriate oversight, can help improve lives, as we saw in Nepal.

2. Protect. The consequences of failing to protect data are well documented. The most obvious problems occur when data is not properly anonymized or when identifiable data leaks into the public domain. But there are also subtler cases in which ostensibly anonymized data can itself be de-anonymized, so that information released for the public good ends up causing, or threatening to cause, harm.

One such case involved New York City’s 2013 taxi trip records, which the Taxi and Limousine Commission released in supposedly anonymized form in response to a public request. The information had been collected from various taxi companies and ride-sharing firms and included pickup and drop-off times, locations, fares, and tip amounts. It wasn’t long before several civic hackers effectively de-anonymized the data, recovering taxi license and medallion numbers. The consequences were worrisome. For example, the data could be used to infer a driver’s annual income or even religion. The hackers also showed that the data could reveal consumers’ travel and spending habits, including those of several celebrities, and could be used to identify passengers who frequented “gentlemen’s clubs.”
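The taxi case illustrates why swapping identifiers for a one-way hash is not, on its own, anonymization when the set of possible identifiers is small: anyone can hash every possible value and match the results. The sketch below assumes, for illustration only, an unsalted MD5 hash and a hypothetical four-character ID format (digit, letter, digit, digit); the function names are invented, and this is not a reconstruction of the commission's actual scheme.

```python
import hashlib
import itertools
import string


def pseudonymize(identifier: str) -> str:
    """Replace an ID with its unsalted MD5 hex digest (a weak scheme)."""
    return hashlib.md5(identifier.encode("ascii")).hexdigest()


def candidate_ids():
    """Yield every ID in a hypothetical format: digit, letter, digit, digit."""
    for d1, letter, d2, d3 in itertools.product(
        string.digits, string.ascii_uppercase, string.digits, string.digits
    ):
        yield f"{d1}{letter}{d2}{d3}"


def reverse_lookup(released_hashes: set[str]) -> dict[str, str]:
    """Map each released digest back to its ID by hashing every candidate."""
    recovered = {}
    for candidate in candidate_ids():
        digest = pseudonymize(candidate)
        if digest in released_hashes:
            recovered[digest] = candidate
    return recovered


# Usage: an "anonymized" release of two IDs is fully reversed after
# hashing only 10 * 26 * 10 * 10 = 26,000 candidates.
released = {pseudonymize("7B42"), pseudonymize("3Z09")}
print(sorted(reverse_lookup(released).values()))  # → ['3Z09', '7B42']
```

Keying the hash with a secret held only by the data holder (for example, an HMAC) blocks this particular attack, though it does nothing to stop re-identification from patterns in the remaining fields, such as trip times and locations.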

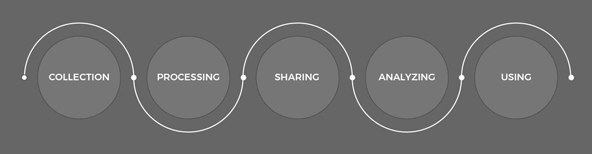

This is just one example. A powerful sense of responsibility at every stage of the information chain—collection, analysis, sharing, and use—must accompany the good intentions guiding data releases. It is also important to keep in mind that some data is perhaps best not collected or shared at all—again, data responsibility does not imply a blanket obligation to collect or share all data.

There are data risks at every stage of the data value cycle, from collection to use. (Image courtesy of GovLab)

3. Act. For the data to really serve the public good, officials and others must create policies and interventions based on the insights they gain from it. Without action, the potential remains just that—mere potential, never translated into concrete results.

This is particularly evident in the struggle against corruption. Around the world, data sets released by governments and others have played a powerful role in increasing transparency. Brazil’s Transparency Portal, for instance, was created in 2004 to increase the fiscal transparency of the federal government by publishing budget data. The portal is now one of the country’s primary tools for identifying and documenting corruption, drawing an average of 900,000 unique visitors every month. Similarly, Mejora Tu Escuela, in Mexico, is an online platform that gives citizens information about school performance. It helps parents choose the best schools for their children and empowers them to demand higher-quality education. Perhaps more importantly, it gives school administrators, policymakers, and NGOs the data to identify hotbeds of corruption. For instance, the platform has played an important role in identifying “ghost teachers” on government payrolls, as well as teachers drawing seemingly outsized salaries.

Yet translating insights like these into impact often requires pursuing vast and difficult changes in the face of vested interests and institutional obstacles. As one person involved in Mejora Tu Escuela said, “If you have more transparency, that doesn’t mean that you have more accountability.” Despite the success of Brazil’s Transparency Portal, for example, corruption there is still widespread. The 2015 Transparency International Corruption Perceptions Index ranks Brazil 76th of 168 countries, on par with countries like India and Burkina Faso and below countries like El Salvador and Jamaica. The index also notes that the country’s position has deteriorated rather than improved in recent years, particularly in light of the vast Petrobras scandal that has made headlines around the world. This underscores the need for action, and shows how the first two pillars of data responsibility depend on the third.

The Need for a Culture Shift

The difficulty of translating insights into results points to some of the larger social, political, and institutional shifts required to achieve the vision of data responsibility in the 21st century. The move from data shielding to data sharing will require a cultural transformation in the way companies, governments, and other organizations treat and act on data. We must become more proactive and make often-unfamiliar commitments to transparency and accountability.

In conclusion, here are four immediate steps, essential but not exhaustive, that we can take to move forward:

- Data holders should issue a public commitment to data responsibility so that it becomes the default—an expected, standard behavior within organizations.

- Organizations should hire data stewards to determine what and when to share, and how to protect and act on data.

- We must develop a data responsibility decision tree to assess the value and risk of corporate data along the data lifecycle.

- Above all, we need a data responsibility movement; it is time to demand data responsibility to ensure data improves and safeguards people’s lives.